library(tidyverse)beginR: Joining, Reshaping & Reproducible Reports

Data and other downloads

Data derived from Brazilian E-Commerce Public Dataset by Olist provided on Kaggle.com under a CC BY-NC-SA 4.0 license.

Cleaned data courtesy of the Tidy Tuesday project

Today

This workshop covers topics from:

- Joining / Merging datasets

bind_rowsandbind_colsinner_join- “Outer Joins” -

full_join,left_join,right_join

- Reshaping data

pivot_longerpivot_wider

- Review of Previous Topics

- Starting a New Project

- Loading the tidyverse

- Importing Data

- Graphics using ggplot

- Data transformations

- Summarizing Data

- Data Transformations

- Reproducible Reports using R Markdown

Motivation: Joining and Reshaping with tidyr

In most of our lessons so far, we’ve typically focused on a single dataset. This week, we’ll cover different methods for combining multiple datasets and transforming the shape of our data.

When using data that includes similar observational units collected by different sources, we will often find ourselves with multiple datasets that need to be combined before we can begin analysis. For example, if we wanted to gather various pieces of information for all of the countries in the world, we’d need to merge or join multiple datasets coming from the UN, the World Bank, the World Health Organization, etc. Or, we may simply need to add observations to an existing dataset (e.g. if we have multiple datasets coming from the World Bank, but each dataset only covers a single year.)

In other cases, we might need to reshape data we already have to make it more appropriate for other software, for analysis, or easier to use within R. If you’re planning to export data from R to another software package, you may need a particular format. For example, mapping software like ArcGIS needs each row of a dataset to represent a geographic location.

Merging / Joining Dataframes

Appending

Sometimes we’ll have two datasets with similar columns that we need to combine. Essentially, we are stacking the rows of those datasets on top of each other. We can combine rows in dplyr with bind_rows:

Dataset 1

a0 <- data.frame("StudentID"=c(1,2),

"GPA_change"=rnorm(2,0,1))

print(a0) StudentID GPA_change

1 1 0.95696840

2 2 -0.05614435Dataset 2

a1 <- data.frame("StudentID"=c(3,4),

"GPA_change"=rnorm(2,0,1),

"Semester"=c("Spring","Fall"))

print(a1) StudentID GPA_change Semester

1 3 -0.6413317 Spring

2 4 -0.8293941 FallDatasets combined

bind_rows(a0,a1) StudentID GPA_change Semester

1 1 0.95696840 <NA>

2 2 -0.05614435 <NA>

3 3 -0.64133165 Spring

4 4 -0.82939413 FallWhen we refer to merging or joining, we usually do not mean appending or adding observations to a dataset in this way. Instead, joining usuallly intends to add columns or variables to our dataframe. R does have a bind_cols function, but in the context below, using bind_cols is unhelpful and results in mismatched records.

b0 <- data.frame("name"=c("Marcos","Crystal"),

"year"=c(1993,1996))

b1 <- data.frame("name"=c("Crystal","Marcos"),

"project_num"=c(6,3))

bind_cols(b0,b1)New names:

• `name` -> `name...1`

• `name` -> `name...3` name...1 year name...3 project_num

1 Marcos 1993 Crystal 6

2 Crystal 1996 Marcos 3Instead, we want to add columns while making sure certain identifying variables, often called keys (e.g. name and name1 above) line up correctly. That way, each row represents information about a single observational unit.

Merging

Keys

To properly line up observations between two datasets, we need a common variable (or group of variables) that uniquely identifies an observation in at least one of the datasets, and identifies it in the same way across both datasets. This variable is usually called a “key”. In our example above, b0 and b1 have the key “name”.

print(b0) name year

1 Marcos 1993

2 Crystal 1996print(b1) name project_num

1 Crystal 6

2 Marcos 3Once we have matching key variable(s) in our datasets, we can join our datasets into consistent observations. There are two major categories of joins - “Inner Joins” and “Outer Joins”.

Types of Joins

We need several different types of joins to facillitate the different ways datasets are organized.

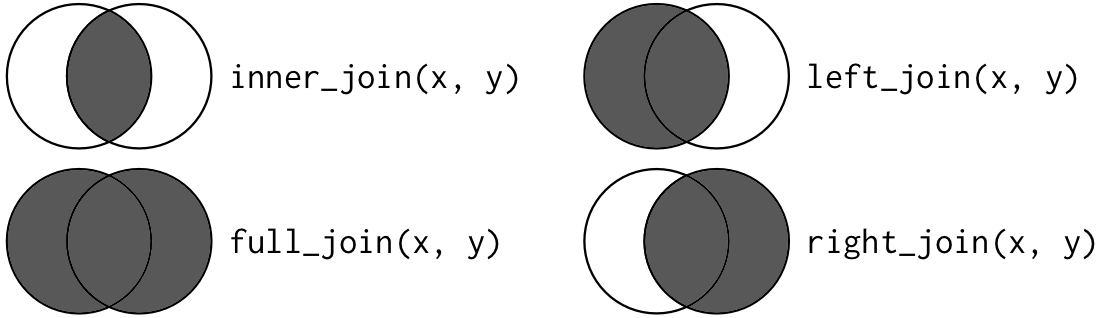

| Join Type | Want to keep | Function |

|---|---|---|

| Inner Join | Only the rows in both datasets | inner_join() |

| Full (Outer) Join | All of the rows | full_join() |

| Left (Outer) Join | All of the rows in the first (left) dataset, only the matches from the second (right) dataset | left_join() |

| Right (Outer) Join | All of the rows in the second (left) dataset, only the matches from the first (right) dataset | right_join() |

The join types can be represented as Venn Diagrams. The lighter parts of the circles represent unmatched records we are leaving out of the join, while the darker parts represent both matched and unmached records we are keeping in the join.

Take a look at these two datasets:

b0 <- data.frame("name"=c("Marcos","Crystal","Devin","Lilly"),

"year"=c(1993,1996,1985,2001))

b1 <- data.frame("person"=c("Marcos","Crystal","Devin","Tamera"),

"project_num"=c(6,3,9,8))print(b0) name year

1 Marcos 1993

2 Crystal 1996

3 Devin 1985

4 Lilly 2001print(b1) person project_num

1 Marcos 6

2 Crystal 3

3 Devin 9

4 Tamera 8Now, let’s merge them using each of the different join types.

Inner Join

inner_join

inner_join(b0,b1,by=c("name"="person")) name year project_num

1 Marcos 1993 6

2 Crystal 1996 3

3 Devin 1985 9Outer Joins

full_join

full_join(b0,b1,by=c("name"="person")) name year project_num

1 Marcos 1993 6

2 Crystal 1996 3

3 Devin 1985 9

4 Lilly 2001 NA

5 Tamera NA 8left_join

left_join(b0,b1,by=c("name"="person")) name year project_num

1 Marcos 1993 6

2 Crystal 1996 3

3 Devin 1985 9

4 Lilly 2001 NAright_join

right_join(b0,b1,by=c("name"="person")) name year project_num

1 Marcos 1993 6

2 Crystal 1996 3

3 Devin 1985 9

4 Tamera NA 8Note: All of these join functions come from the dplyr package, so we can use them with pipes ( %>% )

Extensions

Multiple Keys

In some cases, we may need multiple keys to uniquely identify an observation. For example, our datasets below have two different observations for each person, so we need to join them by both name and year.

multi0 <- data.frame("name"=c("Tamera","Zakir","Zakir","Tamera"),

"year"=c(1990,1990,1991,1991),

"state"=c("NC","VA","VA","NY"))

multi1 <- data.frame("person"=c("Tamera","Zakir","Zakir","Tamera"),

"year"=c(1990,1990,1991,1991),

"project_num"=c(6,3,9,8))

multijoined <- inner_join(multi0,multi1,

by=c("name"="person","year"="year"))

multijoined name year state project_num

1 Tamera 1990 NC 6

2 Zakir 1990 VA 3

3 Zakir 1991 VA 9

4 Tamera 1991 NY 8Many-to-one and One-to-many Joins

If we only specify enough key variables to uniquely identify observations in one dataset and not the other, each unique value from the first dataset will be joined to each instance of that value in the other dataset.

statedata <- data.frame("state"=c("NC","VA","NY"),

"region"=c("Southeast","Southeast","Northeast"))

inner_join(statedata,multijoined,by=c("state"="state")) state region name year project_num

1 NC Southeast Tamera 1990 6

2 VA Southeast Zakir 1990 3

3 VA Southeast Zakir 1991 9

4 NY Northeast Tamera 1991 8Note: We can equivalently omit by=c("state"="state") since the key variables have the same name here.

Reshaping with tidyr

Once we have a single dataset, we may still need to change its shape. When we talk about the shape of a dataset, we are often referring to whether it is wide or long.

Wide dataset - typically includes more columns and fewer rows with a single row for each observation

Long dataset - typically includes fewer columns and more rows with multiple rows for each observation

The many functions used in R for changing a datasets’ shape have varied over the years along with the terminology (e.g. “reshape”, “melt”, “cast”, “tidy”, “gather”, “spread”, “pivot”, etc.) We will now be working with the new reshaping functions recommended by tidyverse, pivot_longer() and pivot_wider().

Hadley Wickham’s Tidy Data provides more discussion of what “Tidy Data” entails and why it’s useful in data analysis.

Use Case: Making ggplot easier

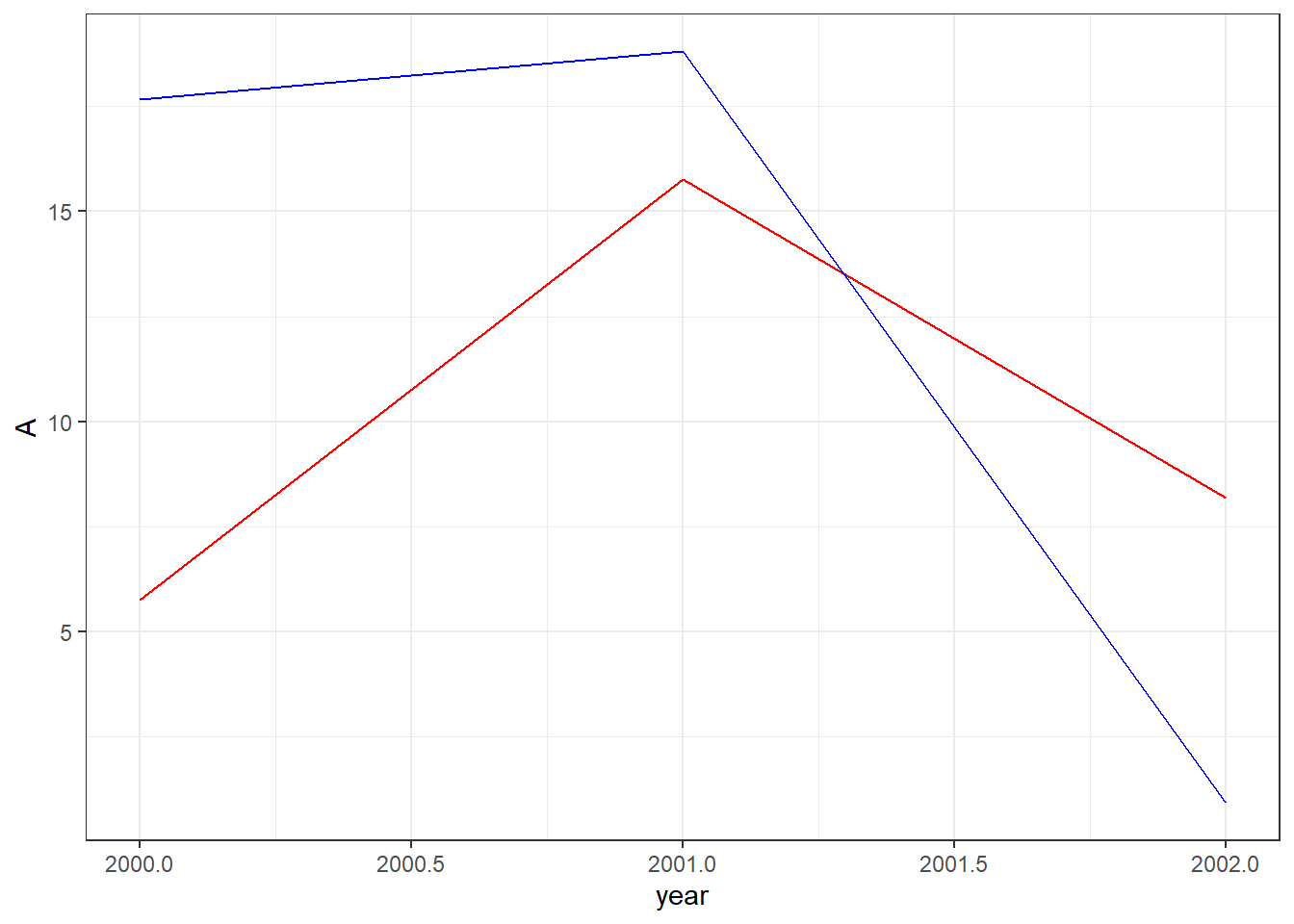

Why reshape when we have perfectly good data? Sometimes R’s own functions are more convenient with reshaped data. Consider the dataset below in which we’ve generated random numbers for columns A and B.

set.seed(123)

df0 <- data.frame(year=c(2000,2001,2002),

A=runif(3,0,20),

B=runif(3,0,20))

print(df0) year A B

1 2000 5.751550 17.66035

2 2001 15.766103 18.80935

3 2002 8.179538 0.91113Now we want to create a line plot in which each column has its own line. Because our dataset is wide, we’ll need to add each line to the plot separately and individually.

ggplot(data=df0,aes(x=year)) +

geom_line(aes(y=A),color="red") +

geom_line(aes(y=B),color="blue") +

theme_bw()

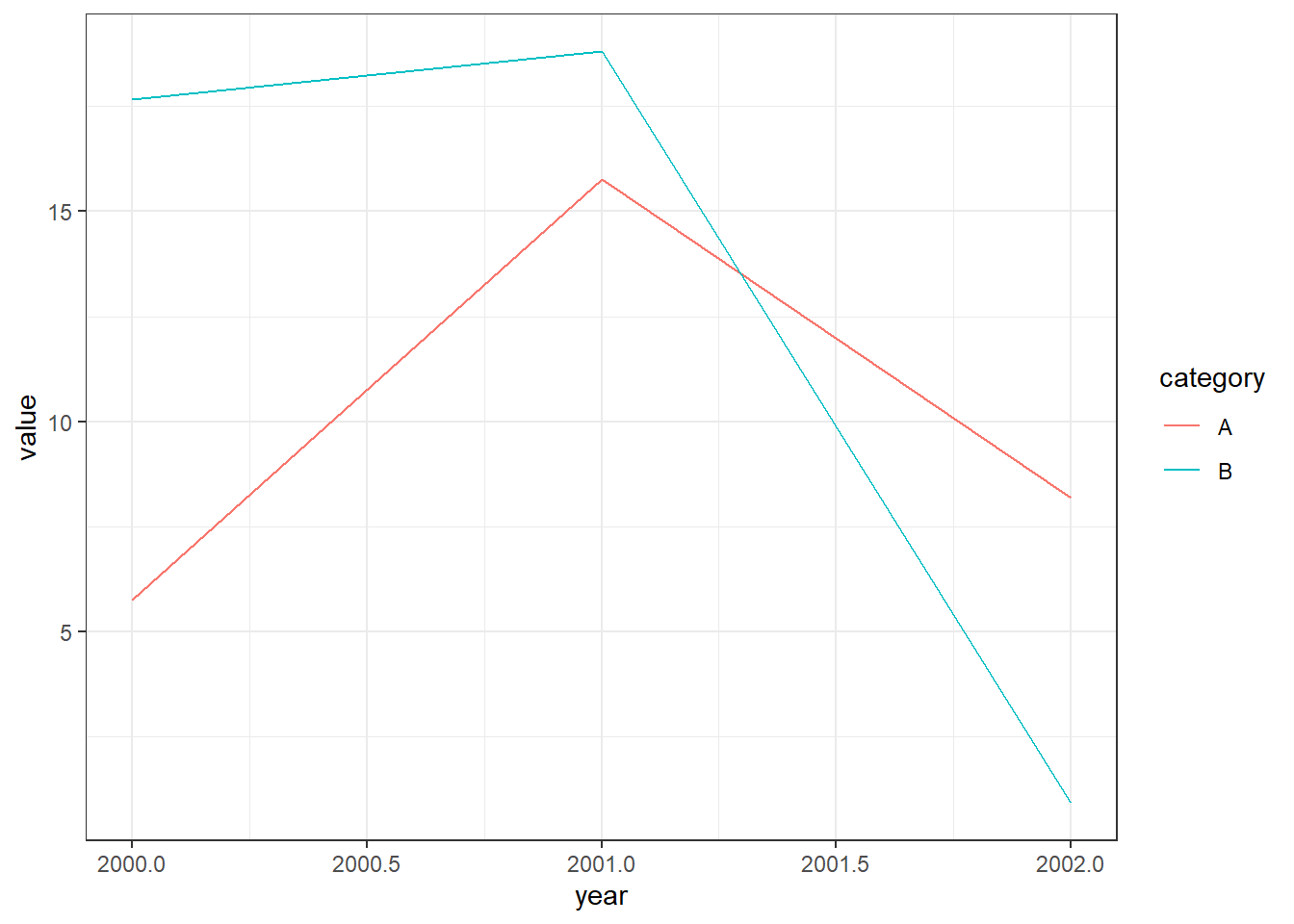

However, if we were using a long dataset, we could create the plot with less code. Note how our dataset below has a single column for categories A and B.

df1 <- data.frame(year=c(df0$year,df0$year),

category=c("A","A","A","B","B","B"),

value=c(df0$A,df0$B))

print(df1) year category value

1 2000 A 5.751550

2 2001 A 15.766103

3 2002 A 8.179538

4 2000 B 17.660348

5 2001 B 18.809346

6 2002 B 0.911130And for our plot, we only need to add a single geom_line while using the category column for our color aesthetic.

ggplot(data=df1,aes(x=year,y=value,color=category))+

geom_line()+

theme_bw()

This may not seem like much of a big deal for this particular plot, but what if we were trying to plot hundreds of lines?

We can move back and forth between the long shape used by df1 and the wide shape used by df0 with tidyr’s pivot_longer and pivot_wider functions.

pivot_longer

First, let’s create a small example dataset with four columns of randomly generated numbers.

set.seed(123)

raw <- data.frame(

City=c("Raleigh","Durham","Chapel Hill"),

x2000=rnorm(3,0,1),

x2001=rnorm(3,0,1),

x2002=rnorm(3,0,1),

x2003=rnorm(3,0,1)

)

print(raw) City x2000 x2001 x2002 x2003

1 Raleigh -0.5604756 0.07050839 0.4609162 -0.4456620

2 Durham -0.2301775 0.12928774 -1.2650612 1.2240818

3 Chapel Hill 1.5587083 1.71506499 -0.6868529 0.3598138x2000 through x2003 represent something measured in 2000 to 2003, since R data.frames cannot have labels that start with numbers.

In the dataset above, we have information stored in separate columns for each year, but it might be more useful to store the year number itself in its own column. We can use the pivot_longer function to do this.

pivot_longer takes four main arguments:

data: this must be a data frame.

cols: a list of column names that we want to pivot into a single column.

names_to: the name of the new column that will contain the names of the columns we are pivoting.

values_to: the name of another new column that will contain values of the columns we are pivoting.

We can also use various other arguments with pivot_longer that allow us to do a number of handy things. For example, the names_prefix argument lets us remove the “x” in front of our year names. Run ?pivot_longer in your console for a full list of arguments that can be used.

longpivot <- raw %>%

pivot_longer(cols = starts_with("x"),

names_to = "Year",

values_to = "Value",

names_prefix = "x")

print(longpivot)# A tibble: 12 × 3

City Year Value

<chr> <chr> <dbl>

1 Raleigh 2000 -0.560

2 Raleigh 2001 0.0705

3 Raleigh 2002 0.461

4 Raleigh 2003 -0.446

5 Durham 2000 -0.230

6 Durham 2001 0.129

7 Durham 2002 -1.27

8 Durham 2003 1.22

9 Chapel Hill 2000 1.56

10 Chapel Hill 2001 1.72

11 Chapel Hill 2002 -0.687

12 Chapel Hill 2003 0.360 It’s often useful to think about how transformations like pivot_longer change the effective observational unit of the dataset. This dataset started with city-level observations in which each row was represented by a unique city name. The transformed data frame longpivot now observes one city in a given year in each row, so we now have multiple rows for the same city.

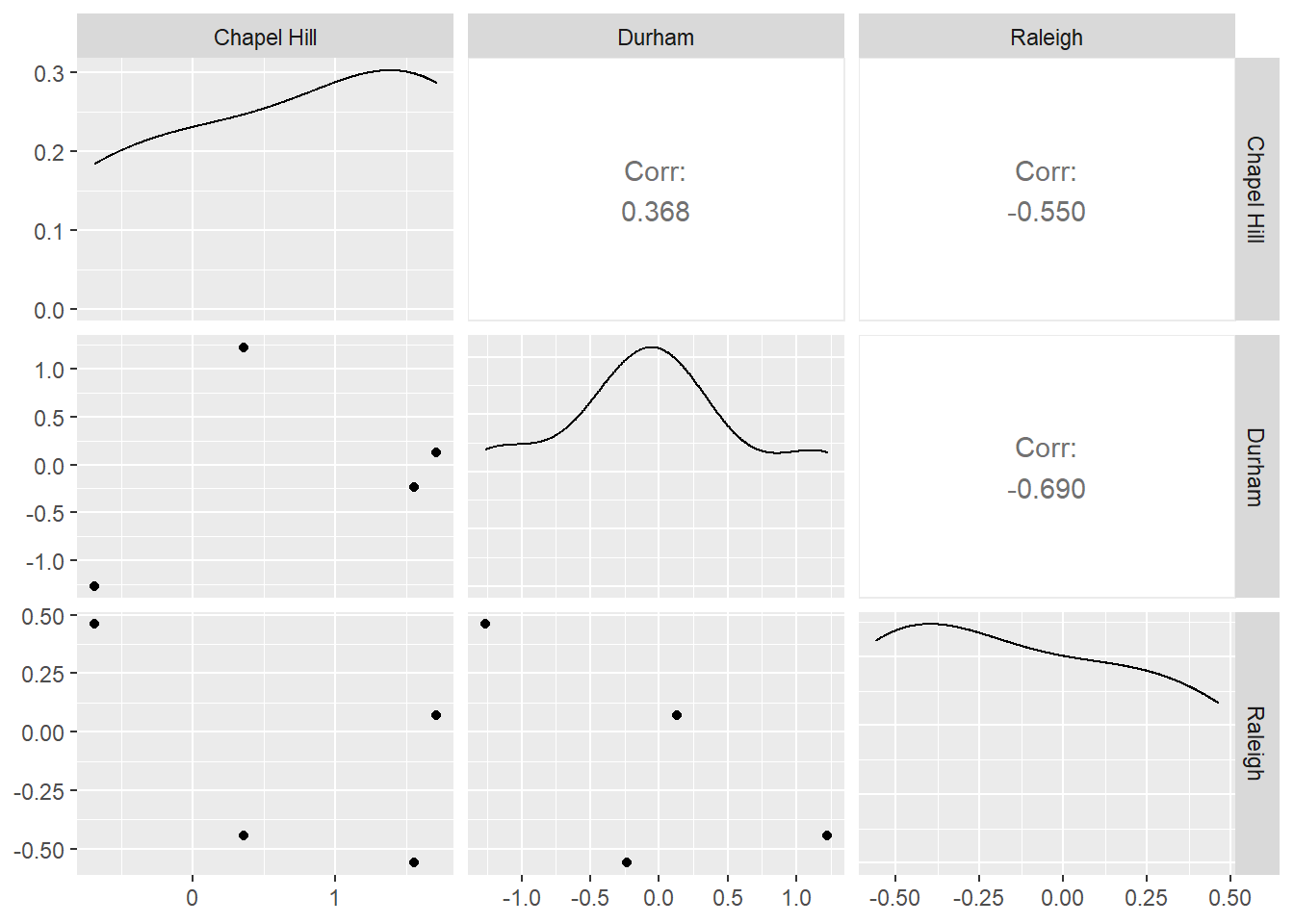

pivot_wider

We can reverse our previous transformation with pivot_wider. This time, we’ll create a separate column for each city.

pivot_wider takes three essential arguments:

data

names_from: the column whose values we’ll use to create new column names.

values_from: another column whose values we’ll use to fill the new columns.

widepivot <- longpivot %>%

pivot_wider(names_from = City, values_from = Value)

print(widepivot)# A tibble: 4 × 4

Year Raleigh Durham `Chapel Hill`

<chr> <dbl> <dbl> <dbl>

1 2000 -0.560 -0.230 1.56

2 2001 0.0705 0.129 1.72

3 2002 0.461 -1.27 -0.687

4 2003 -0.446 1.22 0.360Why would we want each city to have its own column? Well, it might be a useful step to determine which cities are most closely correlated as part of an Exploratory Data Analysis process. For example, we can now easily use this dataset with ggally to create a plot matrix.

library(GGally)

widepivot %>%

select("Chapel Hill","Durham","Raleigh") %>%

ggpairs()

Motivation: Reproducible Reports

R Markdown provides a straightforward way to create reports that combine code and the output from that code with text commentary. This allows for the creation of automated, reproducible reports. R Markdown can knit together your analysis results with text and output it directly into HTML, PDF, or Word documents. In fact, we have been using R Markdown to generate the webpage for all of our R Open Labs workshops!

RMarkdown Structure

R Markdown has three components.

- An (optional) header in a language called YAML. This allows you to specify the type of output file and configure other options.

- R code chunks wrapped by

``` - Text mixed with simple formatting markup.

To create a new R Markdown document (.Rmd), select File -> New File -> R Markdown.

You will have the option to select the output: we’ll use the default HTML for this workshop. Give the document a title and enter your name as author: this will create the header for you at the top of your new .html page! RStudio will create a new R Markdown document filled with examples of code chunks and text.

Header

At the top of the page is the optional Yet Another Markup Language (YAML) header. This header is a powerful way to edit the formatting of your report (e.g. figure dimensions, presence of a table of contents, identifying the location of a bibliography file).

At the top of the page is the optional Yet Another Markup Language (YAML) header. This header is a powerful way to edit the formatting of your report (e.g. figure dimensions, presence of a table of contents, identifying the location of a bibliography file).

Code Chunks

R code chunks are surrounded by

R code chunks are surrounded by ```. Inside the curly braces, it specifies that this code chunk will use R code (other programming languages are supported), then it names this chunk “setup”. Names are optional.

After the name, you specify options on whether you want the code or its results to be displayed in the final document. For this chunk, the include=FALSE options tells R Markdown that we want this code to run, but we do not want it to be displayed in the final HTML document. The R code inside the chunk knitr::opts_chunk$set(echo = TRUE) tells R Markdown to display the R code along with the results of the code in the HTML output for all code chunks below.

Use CTRL+ALT+i (PC) or CMD+OPTION+i (Mac) to insert R code blocks.

Formatted Text

This is plain text with simple formatting added. The ## tells R Markdown that “R Markdown” is a section header. The ** around “Knit” tells R Markdown to make that word bold.

The RStudio team has helpfully condensed these code chunk and text formatting options into a cheatsheet.

You can get pretty far with options in the R Markdown cheatsheet, but R Markdown is a very powerful, flexible language that we do not have time to fully cover. More detailed references are:

https://www.rstudio.com/wp-content/uploads/2015/03/rmarkdown-reference.pdf

Visual R Markdown

Newer versions of R Studio provide a visual editor for R markdown documents. This can be accessed by toggling between the “Source” and “Visual” options in the top left corner of your Rmd script editor pane. (Note: In some older versions of R Studio, this is available as a compass-shaped icon in the top right corner instead).

Once activated, this interface is similar to a word processing software like Microsoft Word - shortcuts for bolding, italics, etc. are usually the same and there are icons and drop down menus available for lists, bullets, links, and more.

You can still use CTRL+ALT+i (PC) or CMD+OPTION+i (Mac) to insert R code blocks, or use the Insert>Code Chunk>R menu in the visual editor.

Read more about the visual editor here:

https://rstudio.github.io/visual-markdown-editing/

Generating the HTML document

Click the Knit button, and R Studio will generate an HTML report based on your R Markdown document.

Let’s try creating an R Markdown document to explore the US cheese consumption data and review what we learned in weeks 1-3.

###Data Import

consumption <- read_csv("data/clean_cheese.csv")Rows: 48 Columns: 17

── Column specification ────────────────────────────────────────────────────────

Delimiter: ","

dbl (17): Year, Cheddar, American Other, Mozzarella, Italian other, Swiss, B...

ℹ Use `spec()` to retrieve the full column specification for this data.

ℹ Specify the column types or set `show_col_types = FALSE` to quiet this message.Useful functions for exploring dataframes

Include one of the following in your document. We’ve used eval = FALSE here to prevent this code chunk from running!

head(consumption)

tail(consumption)

summary(consumption)Tables and knitr::kable

By default, R Markdown will display tables the way they appear in the R console. We can use knitr::kable function to get cleaner tables.

knitr::kable(head(consumption), caption = "The first six rows of the cheese consumption data")| Year | Cheddar | American Other | Mozzarella | Italian other | Swiss | Brick | Muenster | Cream and Neufchatel | Blue | Other Dairy Cheese | Processed Cheese | Foods and spreads | Total American Chese | Total Italian Cheese | Total Natural Cheese | Total Processed Cheese Products |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1970 | 5.79 | 1.20 | 1.19 | 0.87 | 0.88 | 0.10 | 0.17 | 0.61 | 0.15 | 0.41 | 3.32 | 2.20 | 7.00 | 2.05 | 11.37 | 5.53 |

| 1971 | 5.91 | 1.42 | 1.38 | 0.92 | 0.94 | 0.11 | 0.19 | 0.62 | 0.15 | 0.41 | 3.55 | 2.31 | 7.32 | 2.29 | 12.03 | 5.86 |

| 1972 | 6.01 | 1.67 | 1.57 | 1.02 | 1.06 | 0.10 | 0.22 | 0.63 | 0.17 | 0.56 | 3.51 | 2.62 | 7.68 | 2.59 | 13.01 | 6.13 |

| 1973 | 6.07 | 1.76 | 1.76 | 1.03 | 1.06 | 0.11 | 0.21 | 0.66 | 0.18 | 0.65 | 3.31 | 2.68 | 7.83 | 2.80 | 13.49 | 5.99 |

| 1974 | 6.31 | 2.16 | 1.86 | 1.09 | 1.18 | 0.11 | 0.23 | 0.70 | 0.16 | 0.61 | 3.42 | 2.92 | 8.47 | 2.95 | 14.41 | 6.34 |

| 1975 | 6.04 | 2.11 | 2.11 | 1.12 | 1.09 | 0.09 | 0.24 | 0.74 | 0.16 | 0.57 | 3.35 | 3.35 | 8.15 | 3.23 | 14.27 | 6.69 |

Adding a new variable

We’ve covered two ways to add a new variable to a dataframe.

Note: R allows non-standard variable names that include spaces, parentheses, and other special characters. The way to refer to variable names that contain wonky symbols is to use the backtick symbol `, found at the top left of your keyboard with the tilde ~.

The base R way covered in lesson 1 using the $ operator and with() function

#Base R way, covered in lesson 1

consumption$amer_ital_ratio <- with(consumption, `Total American Cheese` / `Total Italian Cheese`)Error in eval(substitute(expr), data, enclos = parent.frame()): object 'Total American Cheese' not foundOops. Better check the variable names.

consumption$amer_ital_ratio1 <- with(consumption, `Total American Chese` / `Total Italian Cheese`)The tidyverse way covered in lesson 3 using the mutate() function

#Tidyverse way, covered in lesson 3

consumption <- mutate(consumption, amer_ital_ratio2 = `Total American Chese` / `Total Italian Cheese`)Selecting Columns

consumption <- select(consumption, Year, Cheddar, Mozzarella, `Cream and Neufchatel`)Renaming Columns

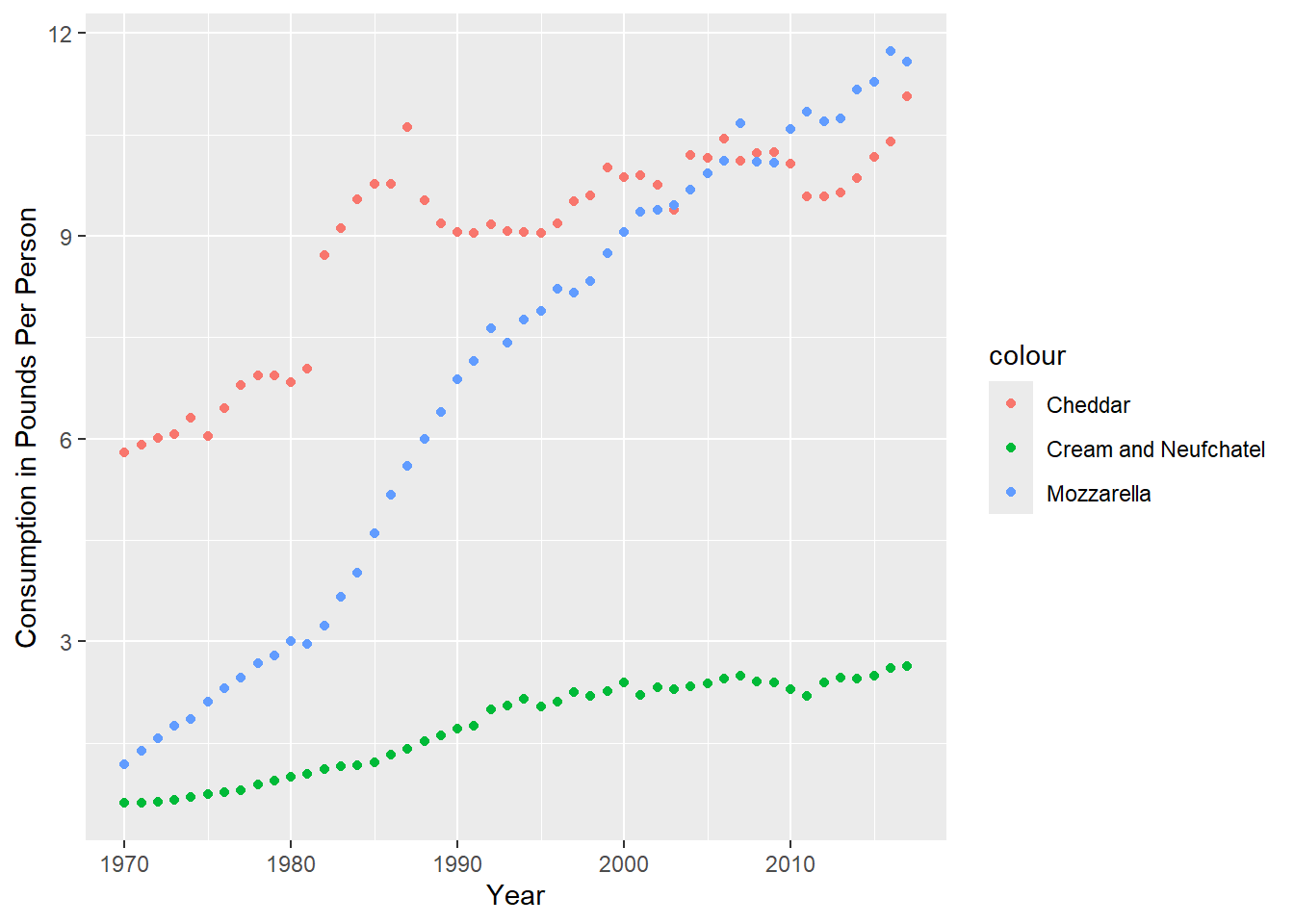

consumption <- rename(consumption, Cream_and_Neufchatel = `Cream and Neufchatel`)Plotting

ggplot(consumption, aes(x = Year)) +

geom_point(aes(y = Cheddar, col = "Cheddar")) +

geom_point(aes(y = Mozzarella, col = "Mozzarella")) +

geom_point(aes(y = Cream_and_Neufchatel, col = "Cream and Neufchatel")) +

ylab("Consumption in Pounds Per Person")

Bibliography

R Markdown also provides a nifty way to incorporate a bibliography and references. We’ll haven an example of this in the exercises, but here’s a brief summary of the steps required to use a BibTex bibliography.

- Create a plain-text .bib file in the same directory as your .Rmd R Markdown document.

- Fill that .bib file with BibTex citations. The Citation Machine can generate the BibTex citations for you.

- Make sure each citation has a unique citation-key, the first entry

- Add a bibliography field to the YAML header that tells R Markdown the name of your bibliography file, for example

bibliography: references.bib - Add citations throughout the document using square brackets.

Exercises

Merge and Reshape

All of today’s exercises involve the datasets included in brazilian-commerce-subset.zip.

Read olist_public_dataset_v2.csv into R. Explore the dataset. If necessary, refer to the metadata provided here.

Read product_category_name_translation.csv into R. Merge this dataframe into olist_public_dataset_v2.csv using

product_category_nameas the key variable.Let’s explore which products are most frequently purchased together:

Use

group_byandsummarizeto find the totalorder_items_qtyfor eachproduct_category_name_englishin eachcustomer state. ReviewUse

pivot_widerto create a new dataframe with a row for eachcustomer_stateand a column for eachproduct_category_name_english. Name this dataframeproducts.Run the two lines of code below (make sure your dataframe from step 4 is called

products!)products <- ungroup(products) #remove grouping products[is.na(products)] <- 0 #replace missing data with zeroesUse

ggpairsor other Exploratory Data Analysis techniques to look for relationships between purchases ofsmall_appliances,consoles_games,air_conditioning, andconstruction_tools_safety. (Remember to runlibrary(GGally)before usingggpairs).Repeat problems 3-6 with

order_products_value(i.e. the amount spent vs the quantity purchased). Do you see different patterns? Explore other product categories.Use

pivot_longerand thenpivot_widerto convert yourproductsdataframe to one where each row is a differentproduct_category_name_englishand each column represents a differentcustomer_state.Choose 5 states. Which of these states have the most similar patterns of spending (as measured by correlation)?

R Markdown

Download the cheese RStudio Project file and extract the R Project contained within. Then, knit the cheeseConsumption.Rmd report. It should generate an HTML report for you.

In the cheeseConsumption.Rmd file, find the code chunk named setup. change

echo=FALSEtoecho=TRUE. Try knitting the document again. What changed? Did this affect the whole document?In the cheeseConsumption.Rmd file, find the code chunk named import. change

message=FALSEtomessage=TRUE. Try knitting the document again. What changed? Did this affect the whole document?Create another R Markdown document analyzing cheese production data contained in the state_milk_productions.csv file. You can use the data dictionary found here to make sense of the different variables. Hint: you’ll need to use the group-by %>% summarize idiom we learned in Week 3 to sum up all the state level data within each year. You’ll probably want to feed the output from that group-by %>% summarize step into knitr::kable() to get a prettier table for your report.

Once you have created an R Markdown report analyzing cheese production, send the entire R Project to a friend (or us!) and ask them to knit that .Rmd document. If they have RStudio and the tidyverse installed, they should be able to seamlessly generate the exact report you generated, without having to make any changes.